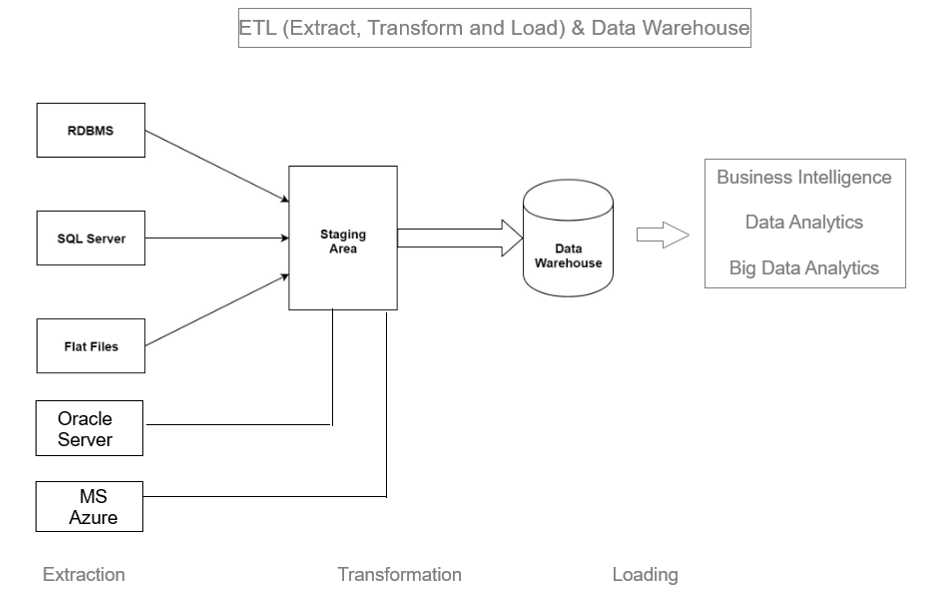

Extract, transform, and load (ETL) is the process of combining data from multiple sources into a large, central repository called a data warehouse. ETL uses a set of business rules to clean and organize raw data and prepare it for storage, business intelligence, data analytics, and machine learning (ML).

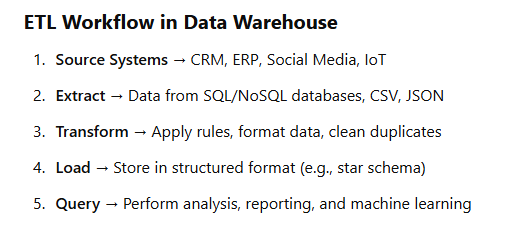

Collect raw data from various sources (databases, APIs, flat files, etc.).

Step [1] – Extract Data: The ETL process first extracts data from various sources such as transactional systems, spreadsheets, and flat files. This step involves reading data from the source systems and storing it in a staging area.
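As a rough illustration of the extract step, the sketch below reads rows from two stand-in sources and collects them in a staging list. The sample data, the `extract_csv` and `extract_records` helpers, and the `_source` tag are all hypothetical names chosen for this example; a real pipeline would read from actual files, databases, or APIs.

```python
import csv
import io

# Hypothetical flat-file source: inline CSV text standing in for an export file.
ORDERS_CSV = """order_id,customer,amount
1001,alice,19.99
1002,bob,5.00
"""

# Hypothetical transactional source: rows as they might come back from a database query.
CRM_ROWS = [{"order_id": "1003", "customer": "carol", "amount": "42.50"}]


def extract_csv(text, source_name):
    """Read rows from CSV text and tag each row with the system it came from."""
    reader = csv.DictReader(io.StringIO(text))
    return [{**row, "_source": source_name} for row in reader]


def extract_records(records, source_name):
    """Copy rows from an in-memory source and tag each row with its origin."""
    return [{**row, "_source": source_name} for row in records]


# The staging area here is just a list; values stay as raw strings until the transform step.
staging = extract_csv(ORDERS_CSV, "flat_file") + extract_records(CRM_ROWS, "crm")
```

Keeping the values untouched at this stage means the staging area remains a faithful copy of the sources, which makes later validation failures easier to trace back.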

Clean, filter, and format raw data to match the data warehouse schema.

Step [2] – Transform Data: The extracted data is transformed into a format that is suitable for loading into the data warehouse. This may involve cleaning and validating the data, converting data types, combining data from multiple sources, and creating new data fields.
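The transform operations listed above can be sketched in a few lines. This is a minimal example under assumed inputs: the `raw_orders` rows, the `customer_regions` lookup, the `transform` function, and the `"UNKNOWN"` default are hypothetical and only show one possible way to clean, validate, convert, combine, and derive fields.

```python
from datetime import date

# Hypothetical staged rows: every value is still a raw string.
raw_orders = [
    {"order_id": "1001", "customer": " Alice ", "unit_price": "19.99",
     "quantity": "2", "order_date": "2024-01-05"},
    {"order_id": "1002", "customer": "bob", "unit_price": "n/a",
     "quantity": "1", "order_date": "2024-01-06"},
    {"order_id": "1003", "customer": "CAROL", "unit_price": "5.00",
     "quantity": "3", "order_date": "2024-01-07"},
]

# Hypothetical second source used to enrich the orders.
customer_regions = {"alice": "EU", "carol": "US"}


def transform(orders, regions):
    """Return (clean_rows, rejected_rows) after validation and type conversion."""
    clean, rejected = [], []
    for row in orders:
        try:
            # Convert data types; a bad or missing value rejects the whole row.
            order_id = int(row["order_id"])
            unit_price = float(row["unit_price"])
            quantity = int(row["quantity"])
            order_date = date.fromisoformat(row["order_date"])
            # Clean: normalize customer names to trimmed lowercase.
            customer = row["customer"].strip().lower()
        except (KeyError, ValueError):
            rejected.append(row)
            continue
        clean.append({
            "order_id": order_id,
            "customer": customer,
            # Combine: look up the region from the second source.
            "region": regions.get(customer, "UNKNOWN"),
            "order_date": order_date,
            # Create a new field derived from existing ones.
            "total": round(unit_price * quantity, 2),
        })
    return clean, rejected


clean_rows, rejected_rows = transform(raw_orders, customer_regions)
```

Returning rejected rows separately, rather than silently dropping them, is one common design choice: it lets the invalid records be inspected or reprocessed later.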

Store transformed data into the data warehouse for reporting and analysis.

Step [3] – Load Data: Once the data has been transformed, it is loaded into the data warehouse. This step includes creating the physical data structures and loading the data into the warehouse.
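To make the load step concrete, the sketch below creates a table and inserts already-transformed rows. It uses an in-memory SQLite database as a stand-in for a data warehouse; the `fact_orders` table, its columns, the sample rows, and the `load` function are assumptions made for this example.

```python
import sqlite3

# Hypothetical output of the transform step, ready to load.
transformed_rows = [
    (1001, "alice", "EU", "2024-01-05", 39.98),
    (1003, "carol", "US", "2024-01-07", 15.0),
]


def load(conn, rows):
    """Create the target table if needed, then insert the rows in one transaction."""
    with conn:  # commits on success, rolls back if an exception is raised
        # Create the physical data structure.
        conn.execute(
            """
            CREATE TABLE IF NOT EXISTS fact_orders (
                order_id   INTEGER PRIMARY KEY,
                customer   TEXT NOT NULL,
                region     TEXT NOT NULL,
                order_date TEXT NOT NULL,
                total      REAL NOT NULL
            )
            """
        )
        # Load the data; re-running with the same order_id replaces the row.
        conn.executemany(
            "INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?, ?, ?)",
            rows,
        )


conn = sqlite3.connect(":memory:")  # stand-in for a warehouse connection
load(conn, transformed_rows)
row_count = conn.execute("SELECT COUNT(*) FROM fact_orders").fetchone()[0]
```

Using `INSERT OR REPLACE` keyed on `order_id` makes the load safe to repeat, which is useful when a pipeline run has to be retried.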

ETL Working Flow in Data Warehouse